As I've been writing more software, I've realized that a few core protocols underpin most secure applications, notably Open Authorization (OAuth). The OAuth protocol has gone through two iterations: OAuth 1.0 and OAuth 2.0. Simply put, OAuth is a protocol that allows users to grant third-party applications access to their protected resources. For example, consider a user who signed up for Tableau to perform analysis on their Snowflake warehouse. In this case, Tableau is the third-party application, and the user's Snowflake warehouse is the protected resource. Rather than giving Tableau their Snowflake credentials, the user can grant Tableau access to the warehouse using OAuth. This way, Tableau can access the warehouse without ever storing the user's Snowflake password.

OAuth 1.0

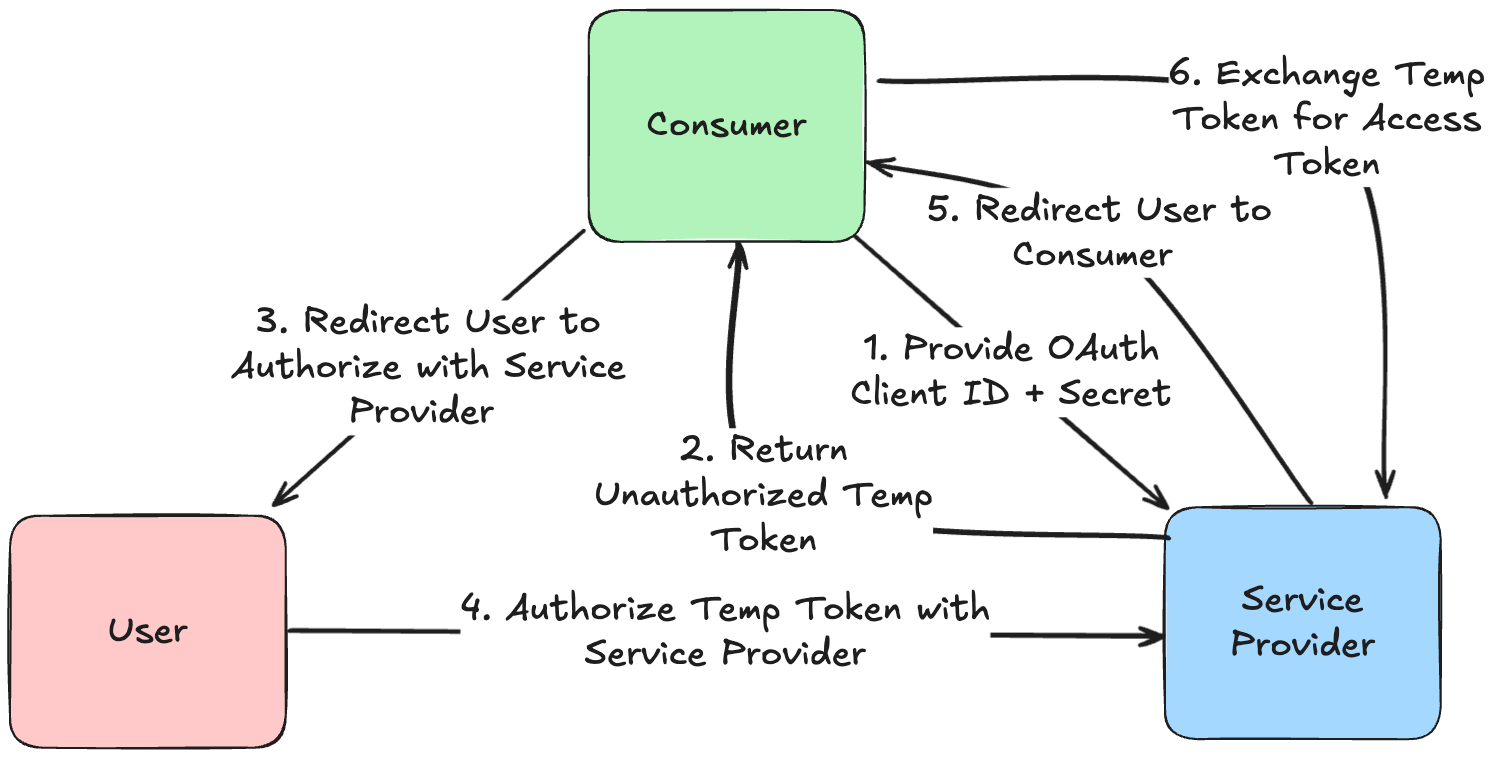

The OAuth 1.0 protocol defines three roles:

- The User who owns the protected resource.

- The Service Provider that hosts the protected resource.

- The Consumer that wants to access the protected resource as a third-party application.

To obtain an access token, these roles interact in three steps:

- The consumer issues a Temporary Credentials Request to identify itself to the service provider.

- The user performs Resource Owner Authorization to allow the consumer to access the protected resource.

- The consumer issues a Token Request to the service provider to obtain an access token to the user's protected resource.

Limitations

While OAuth 1.0 was a significant step forward for delegated authorization, the protocol has a few limitations. First, OAuth 1.0 does not define any standard for refresh tokens. Therefore, access tokens must either be long-lived or require the user to reauthorize when they expire. Second, OAuth 1.0 was not designed for consumers that cannot securely store secrets, because it requires every consumer to have a consumer secret for producing signatures. For single-page applications (SPAs) that run entirely in the browser, a malicious actor could easily download the JavaScript, extract the consumer secret, and begin to forge signed requests. Third, OAuth 1.0 does not standardize how access scopes should be handled. Typically, the access token granted to a consumer should be scoped to only a specific set of resources. In OAuth 1.0, the specification of scopes is allowed to vary between implementations, leading to potentially unclear semantics, complex implementations, and a lack of portability.

OAuth 2.0

The OAuth 2.0 protocol was developed after observing how OAuth 1.0 behaved in real-world use cases. OAuth 2.0 is not backwards compatible with OAuth 1.0 and differs from it in many ways. At a high level, the main differences are a separate authorization server, flexible authorization flows, refresh tokens, and scopes.

Roles

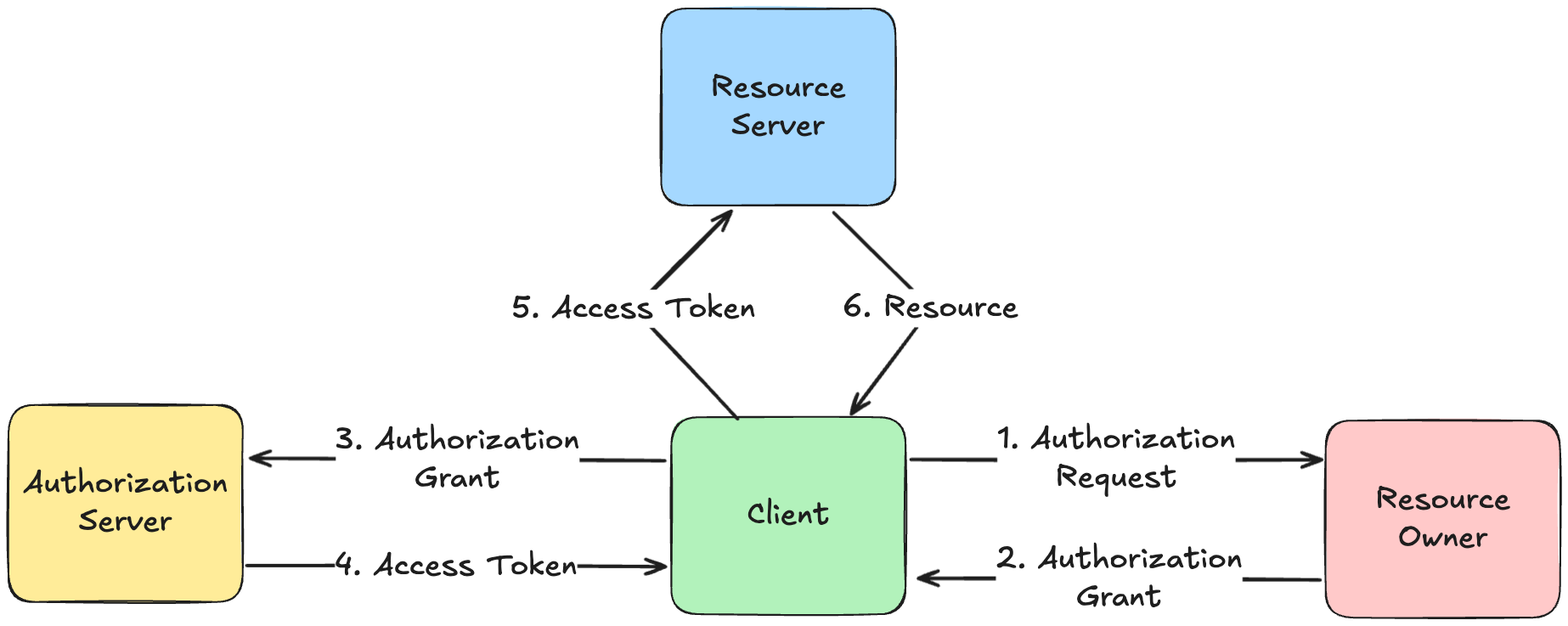

OAuth 2.0 defines four roles:

- The Resource Owner who owns the protected resource.

- The Resource Server that hosts the protected resource.

- The Client that wants to access the protected resource as a third party application.

- The Authorization Server that issues access tokens to the client.

Flows

- Authorization Code: After the resource owner authenticates with the authorization server, the authorization server redirects the resource owner back to the client with a code query parameter in the redirect URI. The client then exchanges this code, along with its own credentials, for an access token at the authorization server. The code itself could be a high-entropy string that the authorization server stores in a database to verify later, or it could be a JWT with a signature.

- Implicit: Rather than getting an authorization code, the client gets an access token directly from the authorization server after the resource owner authenticates. This has a couple of benefits over the authorization code flow. The first is lower latency, since fewer network round trips are needed to get an access token. The second is that the client does not have to authenticate itself with a client secret, which is particularly useful for public clients such as SPAs that cannot securely store secrets. However, not authenticating the client opens opportunities for attackers, which is why the implicit flow is typically no longer recommended for public clients today. Instead, public clients are advised to use the authorization code flow with Proof Key for Code Exchange (PKCE). At a high level, PKCE has the client generate a random code verifier and derive a code challenge from it (e.g. the SHA-256 hash of the code verifier), which it sends with the initial authorization request. When the client later trades the authorization code for an access token, it must provide the original code verifier so that the authorization server can verify it by recomputing the code challenge. With this, public clients can prove that they started the authorization request without needing to persist a vulnerable client secret.

- Resource Owner Password Credentials (ROPC): The resource owner gives their username and password directly to the client, and the client sends them to the authorization server in exchange for an access token. In practice, ROPC is rarely used, since handing the username and password to the client presents significant security risks unless the client is highly trusted.

- Client Credentials: If the client is also the resource owner, then the client can use its own client ID and client secret to get back an access token from the authorization server. One setting in which this is useful is in microservice systems where one machine needs to access another service's API. This flow is very efficient as there is just one HTTP request and response.
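
To make the PKCE step from the flows above more concrete, here is a minimal Python sketch of how a code verifier and its SHA-256 code challenge could be produced and checked. The function names (`make_code_verifier`, `make_code_challenge`, `server_verifies`) are my own, not part of any library, and the sketch assumes the `S256` challenge method with base64url encoding described in the PKCE specification (RFC 7636). A real authorization server would also store the challenge alongside the authorization code and expire both.

```python
import base64
import hashlib
import hmac
import secrets


def make_code_verifier() -> str:
    # 32 random bytes encode to a 43-character URL-safe string
    # (the PKCE spec allows verifiers of 43 to 128 characters).
    return secrets.token_urlsafe(32)


def make_code_challenge(verifier: str) -> str:
    # S256 method: base64url(SHA-256(verifier)) with "=" padding removed.
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def server_verifies(stored_challenge: str, presented_verifier: str) -> bool:
    # Authorization server side (hypothetical helper): recompute the
    # challenge from the presented verifier and compare in constant time.
    recomputed = make_code_challenge(presented_verifier)
    return hmac.compare_digest(stored_challenge, recomputed)


# Client side: keep the verifier private, send only the challenge with
# the authorization request, then present the verifier at token exchange.
verifier = make_code_verifier()
challenge = make_code_challenge(verifier)
print(server_verifies(challenge, verifier))
```

Because only the hash travels in the first request, someone who intercepts the authorization code still cannot redeem it without the original verifier.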

Refresh Tokens and Scopes

Now that we have discussed the various ways in which a client can get an access token from the authorization server, we are ready to discuss refresh tokens. A refresh token is optionally issued along with the access token and can be used to obtain another access token after the current one expires. Unlike in OAuth 1.0, access tokens can therefore be short-lived without requiring the user to reauthorize. Additionally, refresh tokens can be used to obtain new access tokens with narrower scopes than the original access token. Each access token is associated with a set of scopes, which define permission levels for various resources. The OAuth 2.0 protocol has a standard for how clients should format scopes in HTTP requests, which I will not detail here.
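
As a rough illustration of what a refresh request can look like, the sketch below builds the form-encoded body a client would POST to the token endpoint. The `grant_type`, `refresh_token`, and `scope` parameter names and the space-delimited scope format come from the OAuth 2.0 specification (RFC 6749); the helper names, the token value, and the scope names `read` and `write` are made up for the example.

```python
from typing import Iterable
from urllib.parse import urlencode


def is_narrowing(original: Iterable[str], requested: Iterable[str]) -> bool:
    # A refresh may ask for the same scopes or fewer, never more.
    return set(requested) <= set(original)


def refresh_request_body(refresh_token: str, scopes: Iterable[str]) -> str:
    # Scopes are sent as one space-delimited string; urlencode turns the
    # spaces into "+" for the application/x-www-form-urlencoded body.
    params = {
        "grant_type": "refresh_token",
        "refresh_token": refresh_token,
        "scope": " ".join(scopes),
    }
    return urlencode(params)


# Hypothetical values: the original grant covered "read" and "write",
# and the client now asks for a token limited to "read".
assert is_narrowing(["read", "write"], ["read"])
print(refresh_request_body("example-refresh-token", ["read"]))
# prints: grant_type=refresh_token&refresh_token=example-refresh-token&scope=read
```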

Refresh tokens are generally long-lived compared to access tokens. This is acceptable because, unlike access tokens, refresh tokens are not transmitted in API requests to the resource server; they are only ever sent to the authorization server. They therefore have a smaller attack surface and less chance of being leaked to an attacker. Additionally, refresh tokens are typically invalidated and rotated once they are used to generate a new access token, which limits how long a stolen refresh token remains useful.
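
Rotation is easier to see in code. The following is a toy, in-memory sketch of how an authorization server might rotate refresh tokens and reject a token that is presented twice. The `RefreshTokenStore` class and its methods are hypothetical; a production server would persist this state, expire tokens, and usually revoke the whole token family when reuse is detected.

```python
import secrets


class RefreshTokenStore:
    """Toy in-memory store illustrating single-use refresh tokens."""

    def __init__(self) -> None:
        self._active = {}   # refresh token -> user id
        self._used = set()  # tokens that have already been redeemed

    def issue(self, user_id: str) -> str:
        token = secrets.token_urlsafe(32)
        self._active[token] = user_id
        return token

    def rotate(self, presented: str) -> str:
        # A token seen for a second time suggests it was stolen or replayed.
        if presented in self._used:
            raise PermissionError("refresh token reuse detected")
        user_id = self._active.pop(presented, None)
        if user_id is None:
            raise PermissionError("unknown refresh token")
        # Invalidate the old token and hand back a fresh one.
        self._used.add(presented)
        return self.issue(user_id)


store = RefreshTokenStore()
first = store.issue("alice")
second = store.rotate(first)  # normal refresh: old token is retired
try:
    store.rotate(first)       # replaying the old token is rejected
except PermissionError as err:
    print(err)
```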

Reliance on TLS

Unlike OAuth 1.0, OAuth 2.0 requires secure transport over TLS (HTTPS). This is because OAuth 2.0 includes the access token as a bearer token in the Authorization header when issuing API requests to the resource server. Without TLS, an attacker listening on the network could steal the access token from the header. This is in contrast to OAuth 1.0, which relies on the consumer signing each request (typically with HMAC-SHA1) using a key derived from the consumer secret and the token secret issued alongside the access token. In this way, OAuth 2.0 shifts some of the cryptographic complexity away from the protocol and into the transport layer.
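
To show the difference in cryptographic effort, here is a simplified sketch that contrasts an OAuth 1.0 HMAC-SHA1 signature with an OAuth 2.0 bearer header. It follows the general shape of the signature described in the OAuth 1.0 specification (RFC 5849): percent-encode and sort the parameters, build a base string from the method, URL, and parameters, and sign it with a key made from the consumer secret and token secret. The function names, URL, and parameter values are placeholders, and the sketch assumes the URL has no query string and skips details such as nonce and timestamp generation.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def pct(value: str) -> str:
    # Percent-encode everything except unreserved characters.
    return quote(value, safe="~")


def oauth1_signature(method, url, params, consumer_secret, token_secret):
    # 1. Encode and sort the request parameters, then join them.
    encoded = sorted((pct(k), pct(v)) for k, v in params.items())
    param_string = "&".join(f"{k}={v}" for k, v in encoded)
    # 2. Signature base string: METHOD & encoded URL & encoded parameters.
    base_string = "&".join([method.upper(), pct(url), pct(param_string)])
    # 3. Signing key: consumer secret and token secret joined by "&".
    key = f"{pct(consumer_secret)}&{pct(token_secret)}"
    digest = hmac.new(key.encode(), base_string.encode(), hashlib.sha1).digest()
    return base64.b64encode(digest).decode()


def bearer_header(access_token):
    # OAuth 2.0: the token itself is the credential, so TLS must protect it.
    return {"Authorization": f"Bearer {access_token}"}


# Placeholder values for illustration only.
params = {
    "oauth_consumer_key": "example-consumer",
    "oauth_token": "example-token",
    "oauth_nonce": "abc123",
    "oauth_timestamp": "1700000000",
    "oauth_signature_method": "HMAC-SHA1",
}
print(oauth1_signature("GET", "https://api.example.com/photos", params,
                       "consumer-secret", "token-secret"))
print(bearer_header("example-access-token"))
```

The OAuth 1.0 request proves possession of the secrets without sending them, while the OAuth 2.0 request is a single header whose safety depends entirely on the encrypted channel.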

Conclusion

Open Authorization (OAuth) is one of the most common authorization protocols on the internet today. It provides a mechanism for granting third-party applications access to user resources, which is an important workflow on the web. Here, we covered its original design in OAuth 1.0, as well as the changes introduced in OAuth 2.0.